In this blog, we discuss how to build a few simple neural networks from scratch. The full implementation can be found here.

Implement the backpropagation algorithm for a neural network with a single hidden layer that uses the sigmoid activation function. The output layer is a softmax over 10 classes, one for each handwritten digit (0 to 9).
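The forward and backward passes can be sketched in plain Python (standard library only, so it runs without extra dependencies). This is a minimal illustration, not the linked implementation. It reads "single-layer" as one sigmoid hidden layer feeding a softmax output. The class name `TinyMLP`, the N(0, 0.1) weight initialization, the learning rate and plain per-example SGD are all assumptions. With softmax plus cross-entropy, the output-layer error simplifies to `p - onehot(y)`. That error is then pushed back through `W2` and multiplied by the sigmoid derivative `h * (1 - h)`.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def softmax(z):
    m = max(z)  # subtract the max logit for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


class TinyMLP:
    """One sigmoid hidden layer + softmax output (hypothetical sizes and init)."""

    def __init__(self, n_in, n_hidden, n_out, seed=0):
        rng = random.Random(seed)
        # Assumed init: weights ~ N(0, 0.1^2), biases zero
        self.W1 = [[rng.gauss(0.0, 0.1) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.W2 = [[rng.gauss(0.0, 0.1) for _ in range(n_hidden)] for _ in range(n_out)]
        self.b2 = [0.0] * n_out

    def forward(self, x):
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.W1, self.b1)]
        p = softmax([sum(w * hj for w, hj in zip(row, h)) + b
                     for row, b in zip(self.W2, self.b2)])
        return h, p

    def train_step(self, x, y, lr=0.5):
        """One SGD step on a single example with integer label y; returns its loss."""
        h, p = self.forward(x)
        # Softmax + cross-entropy: dL/dz2 = p - onehot(y)
        d2 = [pk - (1.0 if k == y else 0.0) for k, pk in enumerate(p)]
        # Backpropagate with the *old* W2: dL/dz1 = (W2^T d2) * h * (1 - h)
        d1 = [sum(self.W2[k][j] * d2[k] for k in range(len(d2))) * h[j] * (1.0 - h[j])
              for j in range(len(h))]
        # Gradient-descent updates for the output layer
        for k, row in enumerate(self.W2):
            for j in range(len(row)):
                row[j] -= lr * d2[k] * h[j]
            self.b2[k] -= lr * d2[k]
        # Gradient-descent updates for the hidden layer
        for j, row in enumerate(self.W1):
            for i in range(len(row)):
                row[i] -= lr * d1[j] * x[i]
            self.b1[j] -= lr * d1[j]
        return -math.log(p[y])  # cross-entropy before the update

    def predict(self, x):
        _, p = self.forward(x)
        return max(range(len(p)), key=p.__getitem__)


if __name__ == "__main__":
    # Hypothetical toy input; real use would feed flattened digit images and labels 0-9
    net = TinyMLP(n_in=4, n_hidden=5, n_out=10)
    x, y = [0.2, -0.1, 0.7, 0.5], 3
    for _ in range(50):
        loss = net.train_step(x, y)
    print(loss, net.predict(x))
```

For real digit data, `n_in` would be the number of pixels per image and `n_out` would be 10. A NumPy version would replace the inner loops with matrix products and process mini-batches.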

A detailed implementation is available here.

Train a restricted Boltzmann machine (RBM) with 100 hidden units using contrastive divergence (CD), starting with k = 1 Gibbs sampling step (CD-1). Initialize the weights with samples from a normal distribution with mean 0 and standard deviation 0.1.
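A minimal CD-k sketch for a binary RBM follows, again in pure Python. The 100 hidden units, k = 1 and the N(0, 0.1) weight initialization come from the text. The following are assumptions: binary (Bernoulli) units, zero initial biases, the learning rate, single-example updates and the class name `RBM`. The positive phase uses statistics computed from the data vector. The negative phase uses statistics after k steps of block Gibbs sampling.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class RBM:
    """Bernoulli-Bernoulli RBM trained with CD-k (illustrative sketch)."""

    def __init__(self, n_visible, n_hidden=100, seed=0):
        self.rng = random.Random(seed)
        # Weights ~ N(0, 0.1^2) as in the text; zero biases are an assumption
        self.W = [[self.rng.gauss(0.0, 0.1) for _ in range(n_hidden)]
                  for _ in range(n_visible)]
        self.b = [0.0] * n_visible  # visible biases
        self.c = [0.0] * n_hidden   # hidden biases

    def p_h_given_v(self, v):
        return [sigmoid(self.c[j] + sum(v[i] * self.W[i][j] for i in range(len(v))))
                for j in range(len(self.c))]

    def p_v_given_h(self, h):
        return [sigmoid(self.b[i] + sum(self.W[i][j] * h[j] for j in range(len(h))))
                for i in range(len(self.b))]

    def sample(self, probs):
        # Draw binary states from per-unit Bernoulli probabilities
        return [1.0 if self.rng.random() < p else 0.0 for p in probs]

    def cd_step(self, v0, k=1, lr=0.1):
        """One CD-k update on a binary vector v0; returns squared reconstruction error."""
        ph0 = self.p_h_given_v(v0)       # positive-phase hidden probabilities
        h = self.sample(ph0)
        for _ in range(k):               # k steps of block Gibbs sampling
            pv = self.p_v_given_h(h)
            v = self.sample(pv)
            ph = self.p_h_given_v(v)
            h = self.sample(ph)
        # dW = <v h>_data - <v h>_model (approximated after k Gibbs steps)
        for i in range(len(v0)):
            for j in range(len(ph0)):
                self.W[i][j] += lr * (v0[i] * ph0[j] - v[i] * ph[j])
        for i in range(len(v0)):
            self.b[i] += lr * (v0[i] - v[i])
        for j in range(len(ph0)):
            self.c[j] += lr * (ph0[j] - ph[j])
        # Error between the data and the reconstruction probabilities of the last Gibbs step
        return sum((x - r) ** 2 for x, r in zip(v0, pv))


if __name__ == "__main__":
    rbm = RBM(n_visible=6)                # 100 hidden units by default
    v0 = [1.0, 1.0, 0.0, 0.0, 1.0, 0.0]   # hypothetical binary input
    for step in range(300):
        err = rbm.cd_step(v0, k=1)
    print(err)
```

Raising `k` runs the Gibbs chain longer before the negative-phase statistics are taken, which is how one would move from CD-1 to CD-k.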

A detailed implementation is available here.

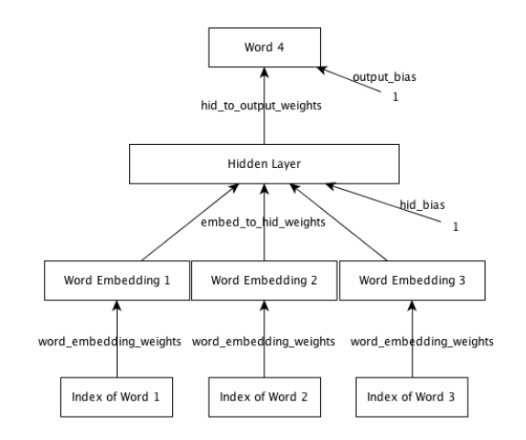

We build a 4-gram language model as a multilayer perceptron (MLP) with an embedding layer, following the architecture in Figure 1. For each sequence of four consecutive words, the model predicts the fourth word from the first three.
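The sketch below assumes a common layout for this kind of model, which may differ in detail from Figure 1. Each of the three context words indexes a shared embedding table, and the three embeddings are concatenated. The result passes through one sigmoid hidden layer and is then mapped to a softmax over the vocabulary. The helper `make_4grams`, the class `FourGramMLP`, the sigmoid hidden activation and all layer sizes are hypothetical. Only the forward pass is shown.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def make_4grams(token_ids):
    """Slide a width-4 window: (three context ids) -> next-word id."""
    return [(tuple(token_ids[i:i + 3]), token_ids[i + 3])
            for i in range(len(token_ids) - 3)]


class FourGramMLP:
    """Embedding -> sigmoid hidden layer -> softmax over the vocabulary (hypothetical sizes)."""

    def __init__(self, vocab_size, emb_dim=16, n_hidden=32, seed=0):
        rng = random.Random(seed)

        def init():
            return rng.gauss(0.0, 0.1)

        self.E = [[init() for _ in range(emb_dim)] for _ in range(vocab_size)]  # shared table
        self.W1 = [[init() for _ in range(3 * emb_dim)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.W2 = [[init() for _ in range(n_hidden)] for _ in range(vocab_size)]
        self.b2 = [0.0] * vocab_size

    def forward(self, context):
        """Return a probability for every word in the vocabulary being the next word."""
        # Look up and concatenate the three context embeddings
        x = [v for wid in context for v in self.E[wid]]
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.W1, self.b1)]
        z = [sum(w * hj for w, hj in zip(row, h)) + b for row, b in zip(self.W2, self.b2)]
        m = max(z)  # stable softmax
        e = [math.exp(v - m) for v in z]
        s = sum(e)
        return [v / s for v in e]


if __name__ == "__main__":
    sentence_ids = [0, 5, 6, 7, 1]        # hypothetical ids, e.g. START w1 w2 w3 END
    model = FourGramMLP(vocab_size=10)
    for context, target in make_4grams(sentence_ids):
        probs = model.forward(context)
        print(context, target, round(probs[target], 4))
```

Sharing one embedding table across the three positions means each word has a single vector regardless of where it appears in the context.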

We first sort the tokens by frequency and keep only the 8000 most frequent ones. We then add "START" and "END" tags to the beginning and end of each sentence. Any token outside this 8000-word vocabulary is replaced by "UNK".
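These preprocessing steps can be sketched with `collections.Counter`. The text does not say whether "START", "END" and "UNK" count toward the final vocabulary. Here they are appended after the 8000 most frequent tokens, which is an assumption. The function names `build_vocab` and `preprocess` are hypothetical.

```python
from collections import Counter


def build_vocab(sentences, size=8000):
    """Keep the `size` most frequent tokens, then append the special tags (assumed layout)."""
    counts = Counter(tok for sent in sentences for tok in sent)
    # most_common returns tokens in descending order of frequency
    vocab = [tok for tok, _ in counts.most_common(size)]
    return vocab + ["START", "END", "UNK"]


def preprocess(sentence, vocab_set):
    """Wrap a tokenized sentence in START/END and map out-of-vocabulary tokens to UNK."""
    return ["START"] + [tok if tok in vocab_set else "UNK" for tok in sentence] + ["END"]


if __name__ == "__main__":
    corpus = [["the", "cat", "sat"], ["the", "dog", "sat"], ["a", "cat"]]
    vocab = build_vocab(corpus, size=3)
    vocab_set = set(vocab)
    for sent in corpus:
        print(preprocess(sent, vocab_set))
```

Building a `set` once makes each membership check constant time, which matters when mapping a large corpus.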

A detailed implementation is available here.